Training a transformer to predict the gender of German nouns

Note: the implementation of this project can be found here: der_die_das (github.com/jsalvasoler)

Context

I had wanted to do this for a while. A challenge for every German learner is learning the gender of nouns. German nouns take one of three articles: masculine (der), feminine (die), or neuter (das). I will call the latter gender neutrum (the German term) because I had never heard of neuter before.

There are, of course, gendering rules that generally work; see, for instance, this resource: der - die - das (Duolingo). However, these rules are complex and come with exceptions. Over time, German learners develop some intuition for the articles and can make more accurate guesses on new words. This intuition combines rule memorization (e.g., words ending in -ung are feminine) with similarity guesses (I know that Thema is neutrum → Lemma should also be neutrum).

But look at the next three words:

- Der Löffel (the spoon) - Masculine

- Die Gabel (the fork) - Feminine

- Das Messer (the knife) - Neuter

I know the gender of these words, but using my intuitive gendering, I would have guessed masculine for all of them 🙃. And this is what German learners complain about:

Learners find German noun genders especially unintuitive and arbitrary, and they claim that the few gendering rules are complex and full of exceptions.

So that is what I wanted to study from a Machine Learning perspective:

- How well can a model learn the German genders?

- Given the same dataset size and model architecture, are German genders more difficult to classify than those of other languages?

Gathering datasets

I built the German dataset from this frequency list. It suited my purposes well; I only had to clean up some plural nouns and some nouns made of two words.

I wanted to compare German to other languages, and I chose these two:

- Catalan: it is my native language, and I was really curious to see how the model would do on it, even though it has only two genders. My hypothesis for Catalan was that its gendering is very intuitive and “machine learnable”. I couldn’t find a similar dataset, so I decided to translate the nouns word by word using the Microsoft Translator API. I’d recommend using the Python library deep-translator for this.

- Croatian: it is a perfect comparison to German because it also has three genders. My girlfriend speaks it, which really helped: I didn’t know that Croatian has genders but no articles (as if you said “I went to store” in English). The problem was that when I translated the German dataset to Croatian, der Apfel would of course come back as just jabuka, so I would lose the gender 😢. Then my Croatian-native collaborator suggested a trick: translate each word together with a possessive pronoun, e.g., mein Apfel → moja jabuka. From the pronoun I could extract the gender of the Croatian word: moj → masculine, moja → feminine, and moje → neutrum. Nice.
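The pronoun trick is easy to automate. Below is a minimal sketch, assuming each translation comes back as a two-token phrase such as “moja jabuka”; the function name and the `POSSESSIVE_TO_GENDER` table are hypothetical, and the translation call itself (e.g., through deep-translator) is left out. It assumes singular noun phrases, since plural possessives take different forms, so anything that doesn’t match returns `None` for manual review.

```python
# Hypothetical lookup: nominative singular forms of the Croatian possessive "my".
POSSESSIVE_TO_GENDER = {"moj": "masculine", "moja": "feminine", "moje": "neutrum"}


def gender_from_translation(translation):
    """Extract (noun, gender) from a phrase like 'moja jabuka'.

    Returns None when the phrase does not look like '<possessive> <noun>',
    so those rows can be checked by hand instead of being silently mislabeled.
    """
    tokens = translation.strip().lower().split()
    if len(tokens) != 2:
        return None
    pronoun, noun = tokens
    gender = POSSESSIVE_TO_GENDER.get(pronoun)
    if gender is None:
        return None
    return noun, gender


# "mein Apfel" comes back as "moja jabuka" in the example above.
print(gender_from_translation("moja jabuka"))  # ('jabuka', 'feminine')
print(gender_from_translation("jabuka"))       # None
```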

A nice byproduct of this project is a set of “noun” datasets for German, Croatian, and Catalan: tables of more than 2k nouns each, together with their genders. They can be used to power flashcard-based language-learning apps or to train other models.

- German: 2539 unique nouns

- Croatian: 2196 unique nouns

- Catalan: 2250 unique nouns

Training and evaluation setup

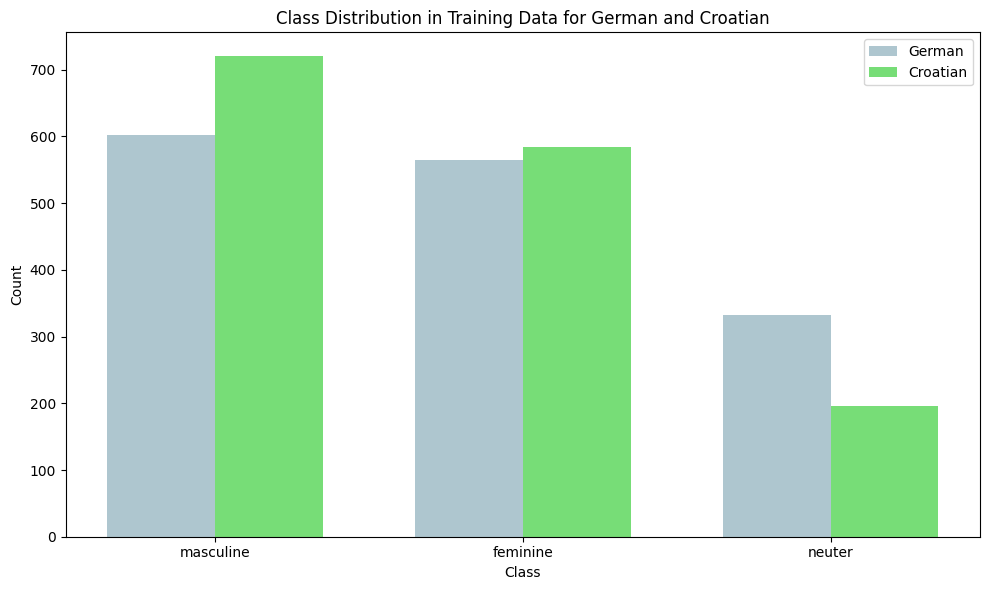

For each of the three languages, I split the processed dataset into a training set of 1500 words and a test set of 500 words. The split is stratified on two variables: (1) gender and (2) word length. Here I plot the class distributions of the German and Croatian words. I plot only the training set because, thanks to stratification, the test-set plots would look almost identical.

For Catalan, 845 words are masculine (56.33%), and 655 words are feminine (43.67%).
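The post doesn’t say how word length was binned or which tool performed the split. Here is a minimal pure-Python sketch of a split stratified on (gender, length bucket), assuming hypothetical length buckets and a 25% test fraction (500 out of 2000 words):

```python
import random
from collections import defaultdict


def length_bucket(word, edges=(4, 6, 8, 10, 12)):
    """Hypothetical binning of word length so each stratum has enough samples."""
    for i, edge in enumerate(edges):
        if len(word) <= edge:
            return i
    return len(edges)


def stratified_split(samples, test_fraction=0.25, seed=0):
    """Split (word, gender) pairs so that every (gender, length bucket) stratum
    contributes roughly the same fraction of its words to the test set."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for word, gender in samples:
        strata[(gender, length_bucket(word))].append((word, gender))

    train, test = [], []
    for key in sorted(strata):  # deterministic order for a fixed seed
        group = list(strata[key])
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction)
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test
```

Because of per-stratum rounding, the test set can be off by a few words from exactly 500. scikit-learn’s `train_test_split` with an integer `test_size` and a combined gender-plus-bucket `stratify` label would hit the sizes exactly.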

Majority class classifier as a baseline

It’s good to keep in mind that a trivial model that always predicts the most populated class already reaches an accuracy equal to that class’s share of the samples. This gives the baselines to beat:

- German: 0.4013

- Croatian: 0.4800

- Catalan: 0.5633
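As a sanity check, the Catalan baseline can be reproduced from the class counts given above. This is a minimal sketch; the function name is hypothetical:

```python
from collections import Counter


def majority_baseline(train_labels, eval_labels=None):
    """Predict the most frequent training label for every sample.

    Returns that label and its accuracy on eval_labels, which defaults to
    the training labels (the proportions quoted above)."""
    if eval_labels is None:
        eval_labels = train_labels
    majority = Counter(train_labels).most_common(1)[0][0]
    accuracy = sum(label == majority for label in eval_labels) / len(eval_labels)
    return majority, accuracy


# Catalan training split: 845 masculine and 655 feminine words.
catalan_train = ["masculine"] * 845 + ["feminine"] * 655
majority, accuracy = majority_baseline(catalan_train)
print(majority, round(accuracy, 4))  # masculine 0.5633
```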

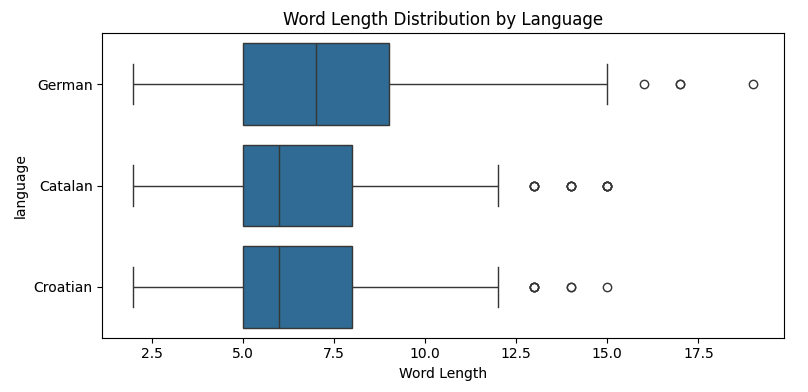

I was also interested in plotting the distribution of word lengths:

Model architecture

I trained a transformer classifier with the following components:

- Embedding Layer: converts input token indices into dense vector representations

- Positional Encoding: adds positional information to the token embeddings

- Transformer Encoder: applies multi-head self-attention and feed-forward networks

- Fully Connected Layer: maps the final representation to output class scores
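The post doesn’t specify how words are tokenized or which positional encoding is used. Here is a minimal sketch of the input side, assuming character-level tokens (with hypothetical reserved indices for padding and unknown characters) and the sinusoidal encoding from the original Transformer paper. The indices would feed the embedding layer, and the encoding is added to the embeddings. The encoder and output layer would typically come from a framework, e.g. PyTorch’s `nn.TransformerEncoder` and `nn.Linear`.

```python
import math

PAD_ID, UNK_ID = 0, 1  # hypothetical reserved token indices


def build_char_vocab(words):
    """Map every character seen in training to an index after PAD and UNK."""
    chars = sorted({ch for word in words for ch in word.lower()})
    return {ch: i + 2 for i, ch in enumerate(chars)}


def encode_word(word, vocab, max_len):
    """Turn a word into a fixed-length list of token indices (truncate, then pad)."""
    ids = [vocab.get(ch, UNK_ID) for ch in word.lower()[:max_len]]
    return ids + [PAD_ID] * (max_len - len(ids))


def sinusoidal_positional_encoding(max_len, d_model):
    """PE[pos][2k] = sin(pos / 10000^(2k/d)), PE[pos][2k+1] = cos(same angle)."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(0, d_model, 2):  # i is the even dimension index 2k
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


vocab = build_char_vocab(["Löffel", "Gabel", "Messer"])
print(encode_word("Gabel", vocab, max_len=8))  # [6, 2, 3, 4, 7, 0, 0, 0]
pe = sinusoidal_positional_encoding(max_len=8, d_model=16)
```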

Training settings:

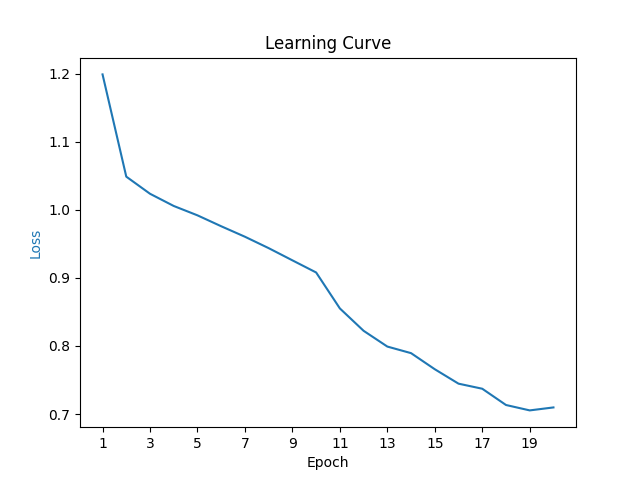

- Loss Function: CrossEntropyLoss

- Optimizer: Adam with learning rate 0.001

- Learning Rate Scheduler: StepLR

- Batch size: 32

- Epochs: 20
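Two of these settings are easy to spell out. The sketch below computes the per-sample cross-entropy (log-softmax followed by negative log-likelihood) and the closed form of a StepLR schedule, where the rate is multiplied by `gamma` every `step_size` epochs. The post doesn’t give `step_size` or `gamma`, so the values here are hypothetical.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one sample: -log(softmax(logits)[target]).

    Uses the log-sum-exp trick so large logits do not overflow."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def step_lr(base_lr, epoch, step_size, gamma):
    """Learning rate under StepLR: base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)


# Hypothetical scheduler settings; only lr=0.001 and 20 epochs come from the post.
STEP_SIZE, GAMMA = 5, 0.5
schedule = [step_lr(0.001, epoch, STEP_SIZE, GAMMA) for epoch in range(20)]
batches_per_epoch = math.ceil(1500 / 32)  # 1500 training words, batch size 32

print(batches_per_epoch)  # 47
```

For reference, a model that outputs equal logits for all three German genders has a loss of ln 3 ≈ 1.10 per word, so training should push the loss below that.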

General results

Accuracy:

- German: 0.5860

- Croatian: 0.6240

- Catalan: 0.6540

F1 Score:

- German: 0.5559

- Croatian: 0.6239

- Catalan: 0.6271

All models beat the majority-class baseline 👏🏻.

Croatian is classified better than German by a clear margin (0.6240 vs. 0.5860 accuracy, 0.6239 vs. 0.5559 F1), suggesting that Croatian nouns can be gendered more intuitively and that the underlying rules are more consistent. Catalan shows the highest accuracy, but keep in mind that for this language we have a binary classification problem. Looking at the F1 score, Croatian and Catalan are similar and both ahead of German.
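In all three detailed reports, recall equals accuracy. That is what you get when per-class scores are averaged with weights proportional to class support (as scikit-learn’s `average="weighted"` does), because weighted recall reduces to the fraction of correct predictions. The pure-Python sketch below assumes that averaging; it is a stand-in for illustration, not the project’s evaluation code.

```python
from collections import Counter


def weighted_scores(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall, and F1."""
    n = len(y_true)
    support = Counter(y_true)
    pairs = list(zip(y_true, y_pred))
    scores = {"accuracy": sum(t == p for t, p in pairs) / n,
              "precision": 0.0, "recall": 0.0, "f1": 0.0}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        predicted = sum(p == label for _, p in pairs)
        precision = tp / predicted if predicted else 0.0
        recall = tp / support[label] if support[label] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        weight = support[label] / n  # labels absent from y_true get weight 0
        scores["precision"] += weight * precision
        scores["recall"] += weight * recall
        scores["f1"] += weight * f1
    return scores


scores = weighted_scores(["der", "der", "die", "das"], ["der", "die", "die", "der"])
print(scores["accuracy"], scores["recall"])  # 0.5 0.5
```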

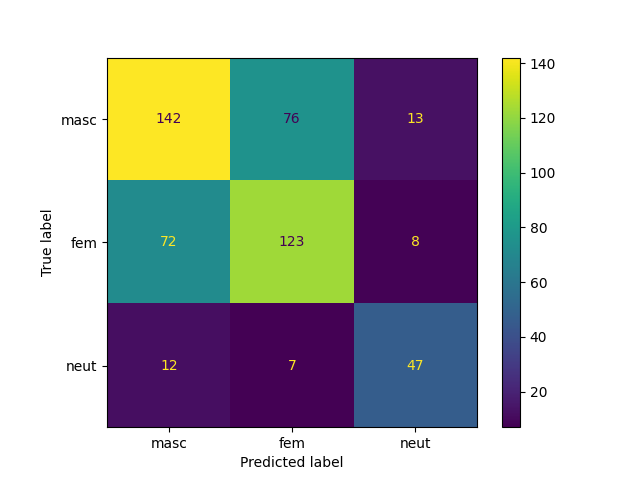

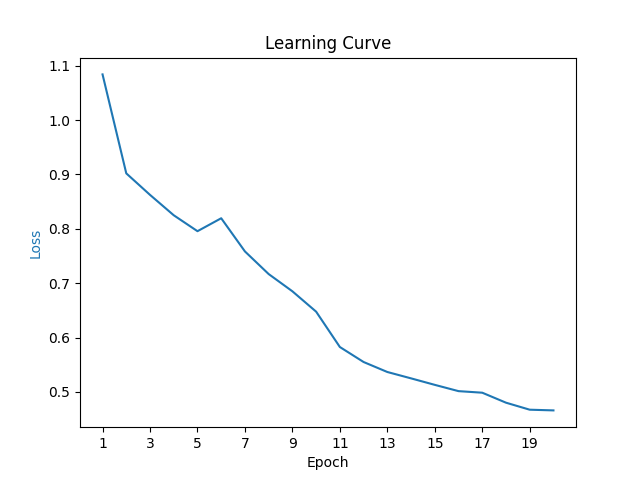

Results - German

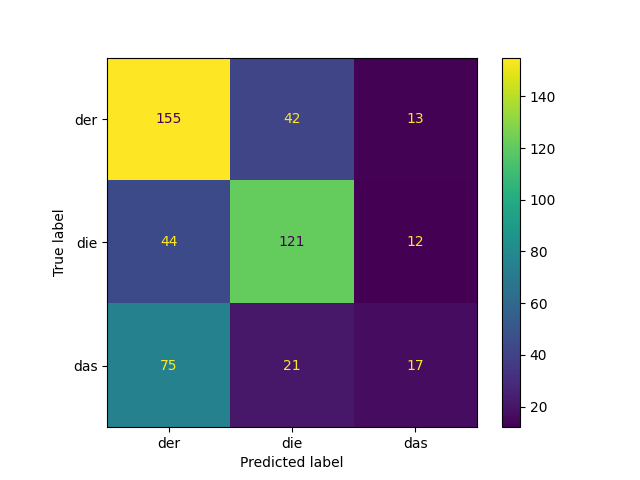

Detailed scores and confusion matrix

**Detailed Scores:**

- Accuracy: 0.5860

- Precision: 0.5619

- Recall: 0.5860

- F1 Score: 0.5559

**Confusion Matrices:**

From the confusion matrix, we see that the most common errors of the model are:

- Predicting “der” when the correct article is “das”

- Predicting “der” when the correct article is “die”

- Predicting “die” when the correct article is “der”
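Reading the most common errors off a confusion matrix amounts to ranking its off-diagonal cells. A minimal sketch (hypothetical helper names; the toy labels are made up, not the project’s test predictions):

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def top_confusions(y_true, y_pred, k=3):
    """Most frequent (true, predicted) pairs among misclassified samples."""
    errors = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    return errors.most_common(k)


y_true = ["das", "das", "die", "der", "die", "das"]
y_pred = ["der", "der", "der", "die", "die", "das"]
print(confusion_matrix(y_true, y_pred, ["der", "die", "das"]))
# [[0, 1, 0], [1, 1, 0], [2, 0, 1]]
print(top_confusions(y_true, y_pred))
# [(('das', 'der'), 2), (('die', 'der'), 1), (('der', 'die'), 1)]
```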

Case study 1: Rule of -ung → feminine

There are 37/500 such words in the test set, and all of them are feminine except for two, one of which is der Ursprung. Naturally, our model classifies all 37 words as feminine and fails on the two exceptions… I guess this is what German learners complain about!

Case study 2: Rule of -um → neutrum

There are just five words with this ending in the test set, but two of them are exceptions to the rule! The model achieved 80% accuracy, better than the rule-based 60%.

Case study 3: Rule of -er → masculine

There are 51/500 test words that end in -er, but again, this rule is full of exceptions: 36 are indeed masculine, while 3 are feminine and 12 are neutrum. Our model achieved 63% accuracy on these words, below the roughly 71% (36/51) that the rule alone would give.
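The three case studies follow the same recipe: take the test words with a given ending, then compare the model’s accuracy with the accuracy of always applying the rule. A minimal sketch with a hypothetical helper; the words and predictions in the usage example are made up:

```python
def suffix_case_study(words, y_true, y_pred, suffix, rule_gender):
    """Compare model accuracy with a suffix rule on words ending in `suffix`.

    Returns (number of words, model accuracy, rule accuracy), or None if
    no word has the suffix."""
    idx = [i for i, w in enumerate(words) if w.lower().endswith(suffix)]
    if not idx:
        return None
    model_acc = sum(y_pred[i] == y_true[i] for i in idx) / len(idx)
    rule_acc = sum(y_true[i] == rule_gender for i in idx) / len(idx)
    return len(idx), model_acc, rule_acc


words = ["Zeitung", "Wohnung", "Ursprung", "Hoffnung", "Messer"]
y_true = ["die", "die", "der", "die", "das"]
y_pred = ["die", "die", "die", "der", "der"]
print(suffix_case_study(words, y_true, y_pred, "ung", "die"))  # (4, 0.5, 0.75)
```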

Results - Croatian

Detailed scores and confusion matrix

**Detailed Scores:**

- Accuracy: 0.6240

- Precision: 0.6239

- Recall: 0.6240

- F1 Score: 0.6239

**Confusion Matrices:**

For Croatian, the two most common errors were confusions between masculine and feminine words, which is largely explained by these two genders being the most populated classes. I don’t speak Croatian, so I didn’t study the results further. But it’s nice to see that there is a language with three genders that are easier to identify than German ones.

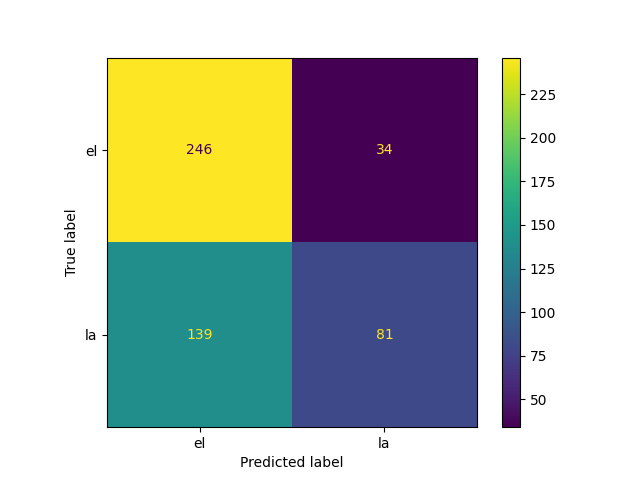

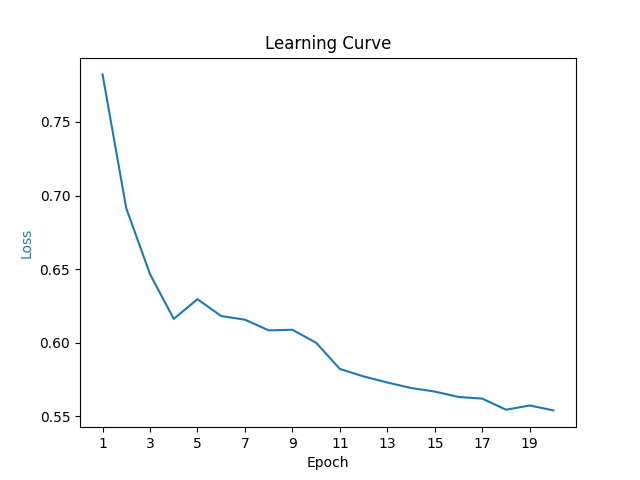

Results - Catalan

Detailed scores and confusion matrix

**Detailed Scores:**

- Accuracy: 0.6540

- Precision: 0.6677

- Recall: 0.6540

- F1 Score: 0.6271

**Confusion Matrices:**

For Catalan, the most common error was predicting masculine for feminine words, which is consistent with a bias toward predicting masculine, the most populated class.

Case study: Rule of words ending in -a → feminine

This rule also holds in many other languages. In the test dataset there are 145/500 such words, and about 92% of them are feminine, so the rule alone would be right most of the time while still leaving roughly a dozen exceptions. Can the model do well on this subset of the test dataset? Spoiler, no: we only get 25% accuracy…

This suggests that the model architecture may be too complex and overlooks simple cues such as the ending of the word. I want to point out, though, that we could easily have built human knowledge into the model architecture, and it makes sense not to do so in order to answer our research questions better. Tuning the architecture to focus on word endings makes sense… but it’s not what “learning genders by looking at the word” means.
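To make the contrast concrete, here is a hypothetical suffix-lookup baseline. It is not part of the project, and it is exactly the kind of human knowledge the transformer was deliberately not given. It learns the most frequent gender for each word ending of up to `max_k` letters and backs off to shorter endings, then to the global majority class. The toy Catalan words are for illustration only.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class SuffixBaseline:
    table: dict   # word ending -> most frequent gender in the training data
    default: str  # global majority gender, used when no ending matches
    max_k: int    # longest ending length considered


def fit_suffix_baseline(words, genders, max_k=3):
    counts = defaultdict(Counter)
    for word, gender in zip(words, genders):
        w = word.lower()
        for k in range(1, min(max_k, len(w)) + 1):
            counts[w[-k:]][gender] += 1
    table = {suffix: c.most_common(1)[0][0] for suffix, c in counts.items()}
    default = Counter(genders).most_common(1)[0][0]
    return SuffixBaseline(table, default, max_k)


def predict(model, word):
    """Use the longest known ending, falling back to the majority class."""
    w = word.lower()
    for k in range(min(model.max_k, len(w)), 0, -1):
        gender = model.table.get(w[-k:])
        if gender is not None:
            return gender
    return model.default


train_words = ["casa", "taula", "porta", "gat", "llibre", "cotxe"]
train_genders = ["feminine"] * 3 + ["masculine"] * 3
model = fit_suffix_baseline(train_words, train_genders)
print(predict(model, "finestra"), predict(model, "arbre"))  # feminine masculine
```

A lookup like this would give a useful reference point for the transformer’s 25% on the -a subset, at the cost of hard-coding the assumption that endings matter.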

Anyway, the model still predicts Catalan genders well above the majority baseline (0.6540 vs. 0.5633 accuracy).

Lessons learned

- Hatch is handy: use it as your Python project and environment manager! I also used an automatic code formatter and commit hooks, and I’m sure I will keep doing so from now on.

- If you have an idea and a bit of time, just get your laptop and work on it! That is how this project happened. But don’t forget to put it on GitHub with a nice README.

- Translation tools like the Microsoft Translator API and libraries like deep-translator make it much easier to create datasets for languages where none are available. The free tier lets you translate something like 2M characters per month.
